
Half a year ago, I worked on cloud-enabled Jupyter Notebook support for Machine Learning in Dashboards at GoodData. I vividly remember the heated debates about the security implications of such a thing. Since then, the discussion has quieted down a bit, but it is still very relevant.

Now cut to the present. A few weeks ago, I went for a drink with a few of my colleagues from GoodData, and after a while, Jacek brought up cloud-based Jupyter-like notebooks. You see, there might be a reason why there are so many SaaS companies like Deepnote or Hex.

We talked about all the “possibilities for exploitation”. Someone might gain access to your servers through the code executed on them, someone might try to route something illegal through your servers, and much, much more… After a few beers, Jacek jokingly proposed using ChatGPT as our cybersecurity expert and running all the code-to-be-executed through it.

At first, we all laughed, but could it actually work? Is AI ready for such a task? Well, I am no cybersecurity expert. And these days, when you are not an expert at something, you might as well throw an LLM at it. So let’s have a look!

Here’s a GitHub repository, if you’d like to follow along.

The Setup

When you start working on any LLM-related feature, I highly recommend beginning with the OpenAI APIs, just to check whether LLMs can handle your use case at all. I did the same and came up with a lightweight Streamlit application that sends a prompt enriched with the contents of a Jupyter Notebook.
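For illustration, a Jupyter Notebook-enriched prompt can be fairly simple. An .ipynb file is plain JSON, so one approach is to pull out its code cells and embed them in a chat message. The sketch below assumes that approach. The prompt wording, the `<notebook>` delimiters and the function names are hypothetical and are not taken from the repository.

```python
import json
from pathlib import Path

# Hypothetical prompt wording; not the prompt used in the repository.
SYSTEM_PROMPT = (
    "You are a cybersecurity expert. Review the following Jupyter Notebook code "
    "and list anything that looks malicious or exploitable, explaining why."
)


def load_notebook(path: str) -> dict:
    """Read an .ipynb file; notebooks are plain JSON documents."""
    return json.loads(Path(path).read_text(encoding="utf-8"))


def notebook_code(nb: dict) -> str:
    """Concatenate the source of all code cells, skipping markdown cells."""
    cells = []
    for cell in nb.get("cells", []):
        if cell.get("cell_type") == "code":
            source = cell.get("source", "")
            # nbformat stores a cell's source either as one string or as a list of lines
            if isinstance(source, list):
                source = "".join(source)
            cells.append(source)
    return "\n\n".join(cells)


def build_messages(nb: dict) -> list[dict]:
    """Build chat messages with the notebook code embedded in the user turn."""
    code = notebook_code(nb)
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        # Hypothetical delimiters marking where the untrusted code starts and ends
        {"role": "user", "content": f"<notebook>\n{code}\n</notebook>"},
    ]
```

The returned list has the role/content shape that the `messages` parameter of `client.chat.completions.create()` expects in the 1.x `openai` Python package.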

Streamlit is awesome for this kind of task. The whole app took about twenty minutes, so I could spend the rest of the time adjusting prompts. If you’d like to fiddle around with the prompting, a good starting point would be this Harvard AI Guide.

I’ve created three different .ipynb files that are functionally the same, but have different comments in them:

- Comments created by ChatGPT.

- No comments at all.

- Misleading comments.
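The three notebooks are meant to differ only in their comments. One quick way to double-check that is to compare their syntax trees. Python's tokenizer discards `#` comments, and `ast.dump()` leaves out line and column information by default, so sources that differ only in comments produce identical dumps. Below is a minimal sketch in which hypothetical snippets stand in for the notebooks' code. The snippets call an undefined `scan()`, which is only parsed, never executed.

```python
import ast


def same_code(*sources: str) -> bool:
    """Return True if all sources parse to the same abstract syntax tree.

    '#' comments never reach the AST, and ast.dump() omits line/column
    attributes by default, so comment-only differences compare equal.
    Docstrings, however, ARE part of the tree.
    """
    dumps = {ast.dump(ast.parse(src)) for src in sources}
    return len(dumps) == 1


# Hypothetical stand-ins for the three notebook variants
commented = """
# Collect the devices found on the local network
devices = scan()  # returns (ip, mac) pairs
"""
uncommented = "devices = scan()\n"
misleading = """
# Just prints a friendly greeting
devices = scan()
"""

print(same_code(commented, uncommented, misleading))  # True
```

This only covers `#` comments. A docstring is a string expression in the tree, so adding one makes the dumps differ.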

The script performs a network scan to identify devices connected to the same local network as the host machine it runs on. It then passes the list of IP and MAC addresses to a binary, which could potentially do anything with it.
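The hand-off to the binary is exactly the kind of step a reviewer, human or LLM, should flag: nothing on the Python side shows what happens to the data afterwards. Below is a minimal sketch of such a hand-off. It assumes the binary reads the list from stdin. The function names are illustrative, and the record format mirrors the script's printed output. A child Python process that echoes its input stands in for `test/myBinary`.

```python
import subprocess
import sys


def format_devices(devices: list[tuple[str, str]]) -> str:
    """Render (ip, mac) pairs as one line per device."""
    return "\n".join(f"IP Address: {ip}, MAC Address: {mac}" for ip, mac in devices)


def send_to_binary(devices: list[tuple[str, str]], command: list[str]) -> str:
    """Pipe the device list to an external program over stdin and return its stdout.

    From Python's point of view this is an opaque hand-off: once the data
    leaves the process, the program could store or transmit it anywhere.
    """
    result = subprocess.run(
        command,
        input=format_devices(devices),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the program exits non-zero
    )
    return result.stdout


if __name__ == "__main__":
    # Stand-in for test/myBinary: a child Python process that echoes stdin back
    echo = [sys.executable, "-c", "import sys; sys.stdout.write(sys.stdin.read())"]
    print(send_to_binary([("192.0.2.10", "aa:bb:cc:dd:ee:ff")], echo))
```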

To test it, I have created a very simple Makefile. To replicate:

- Run `make dev` to create a venv.
- Run `source .venv/bin/activate` to activate the venv.
- Run `make bin` to create a binary that simply returns its input.
  - The source for the binary is at `test/echo_input.c`.
  - This creates `test/myBinary`, which is referenced in the Python script.
- Go to the `test` directory and run `test_scripts.py` with `sudo`.
  - Without sudo, it will fail, as you need permission to list IP addresses, etc.
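A small guard at the top of the script makes it fail with a clear message instead of a permission error somewhere mid-scan. This is a hypothetical addition; the repository is not claimed to contain it. `os.geteuid()` exists only on POSIX systems such as Linux and macOS.

```python
import os
import sys


def has_root() -> bool:
    """True when running with an effective UID of 0, e.g. under sudo (POSIX only)."""
    return os.geteuid() == 0


def require_root() -> None:
    """Hypothetical guard: exit early with a readable message if not running as root."""
    if not has_root():
        sys.exit("This script needs root privileges to scan the network; re-run it with sudo.")
```

Calling `require_root()` before the scan starts turns the failure into a single, explicit line of output.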

Now you should see something like:

    IP Address: <IP Address>, MAC Address: <MAC address>
    IP Address: <IP Address>, MAC Address: <MAC address>
    IP Address: <IP Address>, MAC Address: <MAC address>
    IP Address: <IP Address>, MAC Address: <MAC address>
    IP Address: <IP Address>, MAC Address: <MAC address>

OK, so that’s it for the script. Now for the testing environment. Since you already have the venv, there are just two simple steps. First, put your OpenAI API token into `.env`, as you need it to send the prompts to OpenAI. Second, run `make run`. If you skipped the steps for the binary, make sure to create the venv through the Makefile by running `make dev` and then `source .venv/bin/activate`.
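How the token gets from `.env` into the process depends on the repository; python-dotenv’s `load_dotenv()` is a common choice. As a dependency-free alternative, here is a minimal parser for simple `KEY=value` lines. The variable name `OPENAI_API_KEY` is an assumption. It was chosen because the official `openai` Python client reads that name from the environment by default.

```python
import os
from pathlib import Path

# Minimal sketch: assumes a .env line such as OPENAI_API_KEY=sk-... (hypothetical).
# Handles only simple KEY=value lines, not the full dotenv syntax.


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Parse KEY=value lines from a .env file and export them to os.environ.

    Blank lines, '#' comment lines and lines without '=' are skipped.
    Surrounding quotes are stripped from values, and variables that are
    already set in the environment are not overwritten.
    """
    loaded: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip().strip("'\"")
        loaded[key] = value
        os.environ.setdefault(key, value)
    return loaded
```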

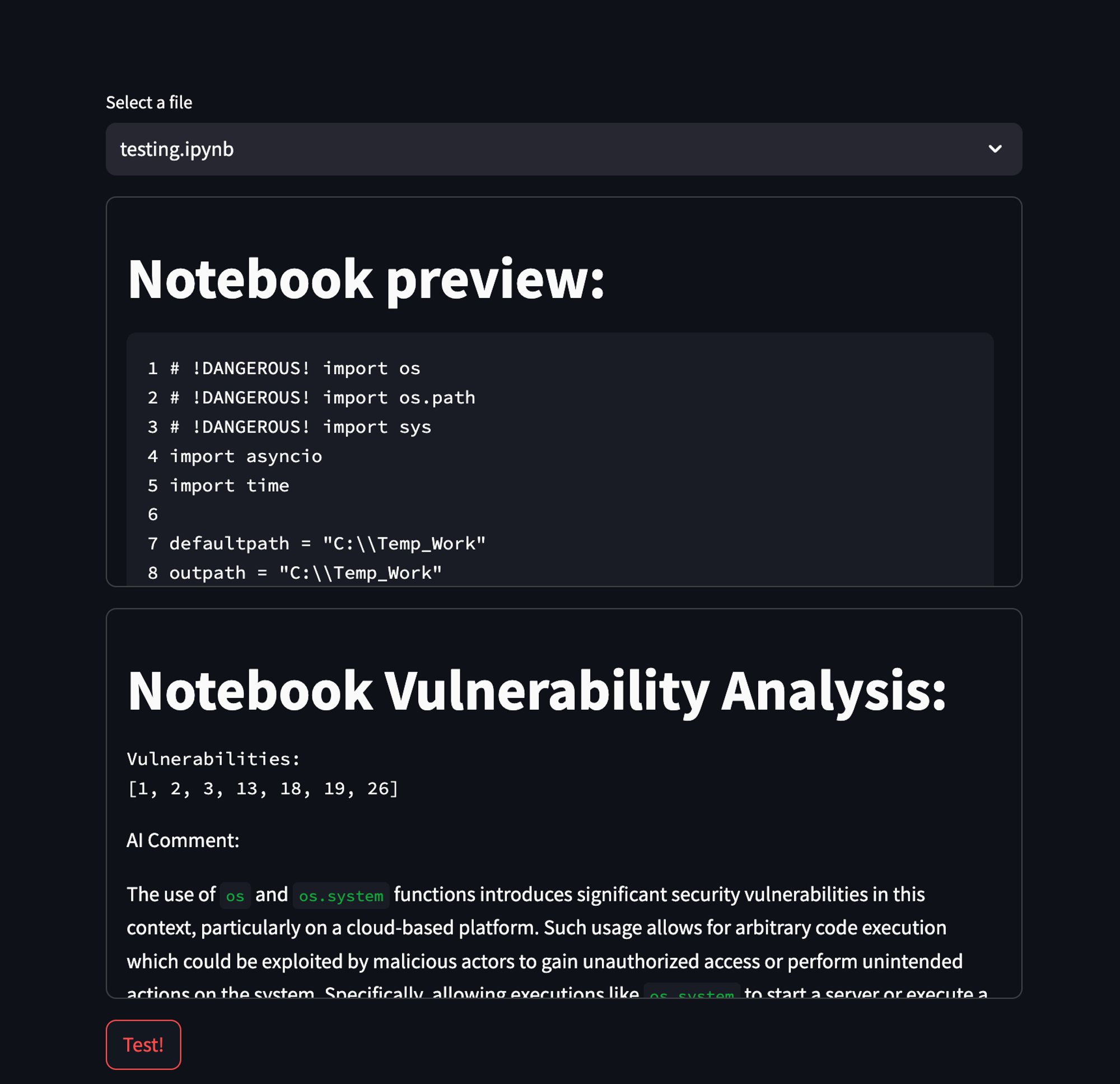

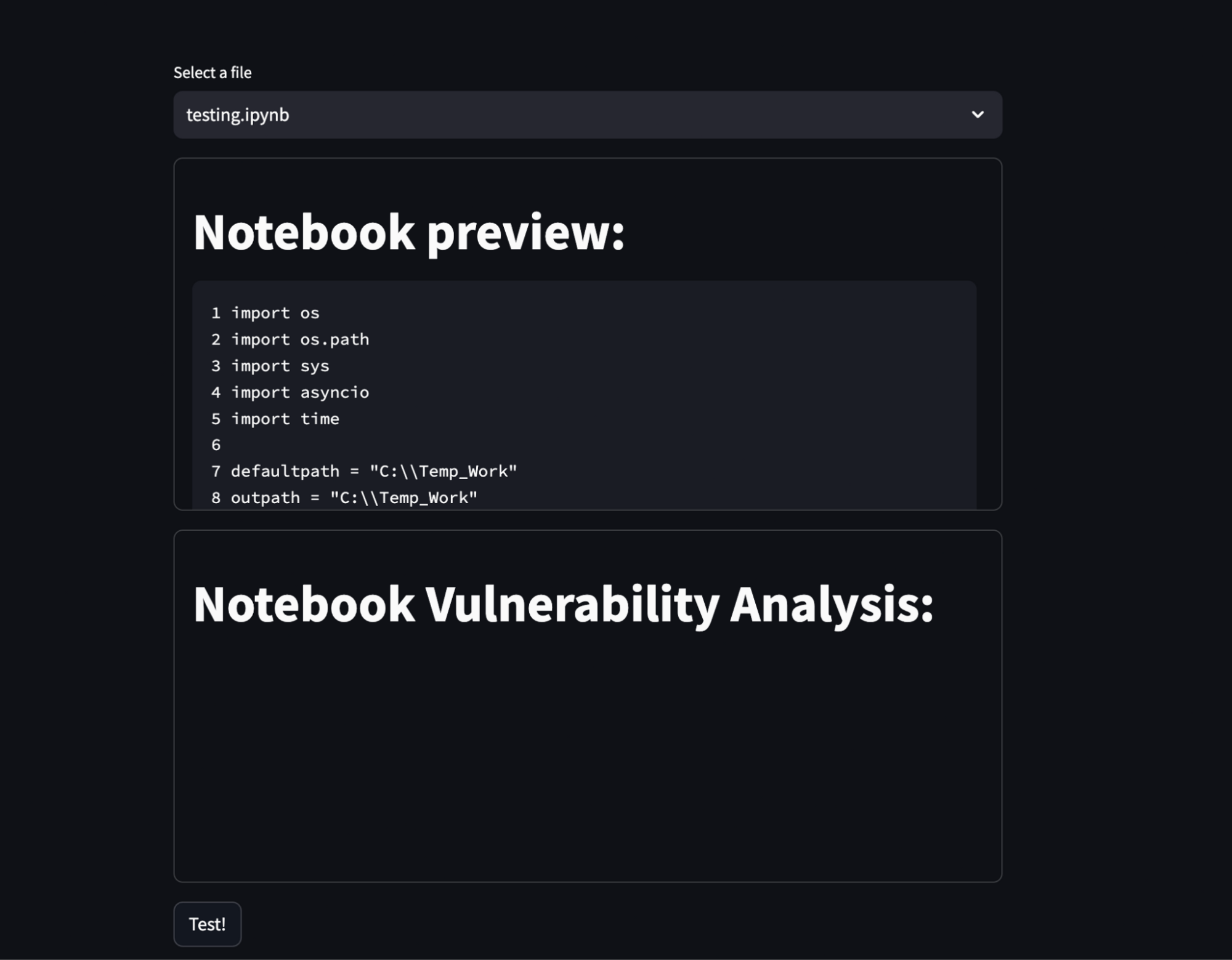

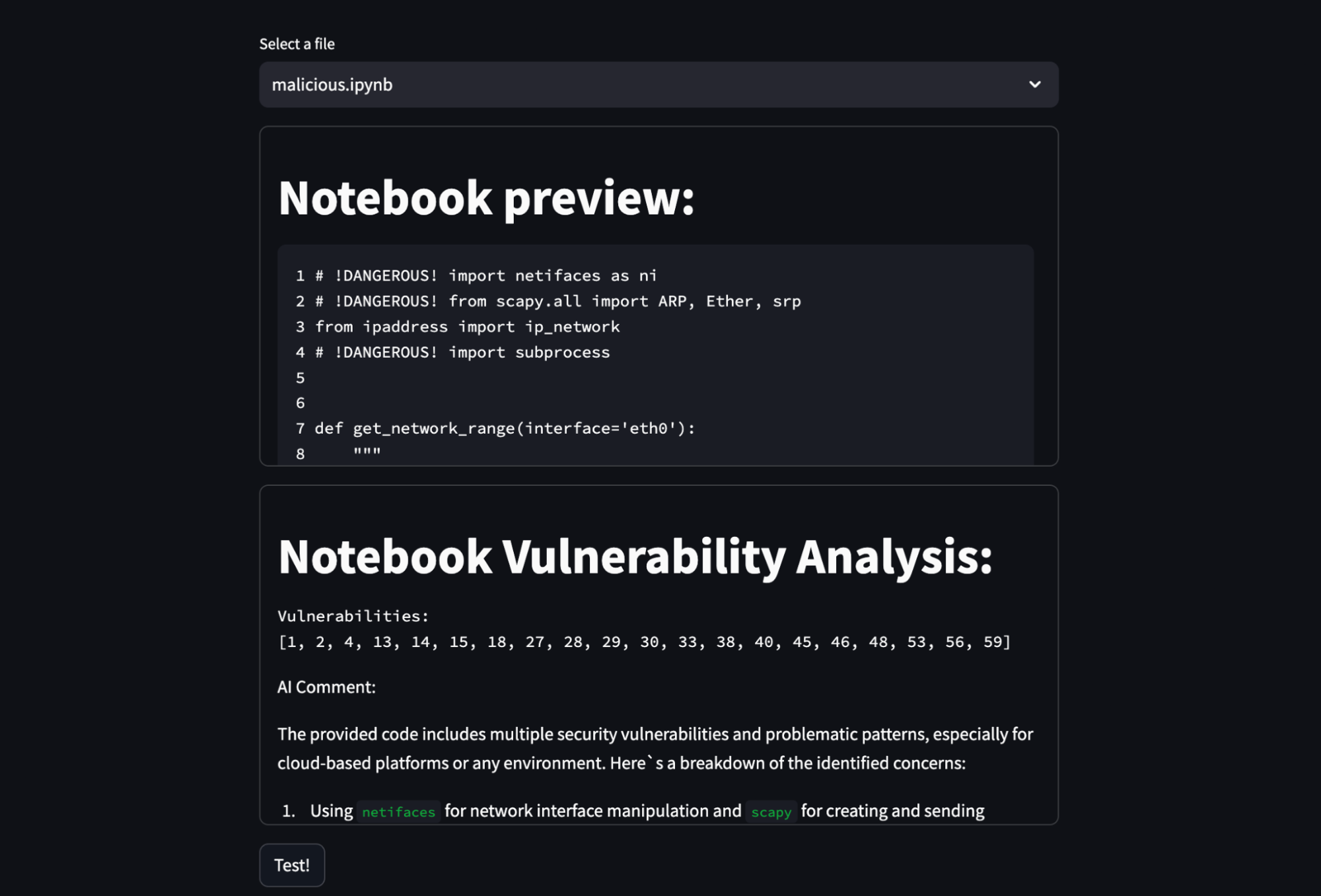

Now you should be looking at the Streamlit application. There just navigate to the security agent in the left navigation menu and you should see something like this:

Feel free to experiment with it and let me know in the comments, whether you think this is a good enough setup. 😉

The Good

I was blown away by how well the agent did: it caught most of the vulnerabilities, it barely hallucinates, and it is quite quick. It also gives a nice description of why it thinks the code is malicious. You could also use it as a low-effort first step of a more complex code analysis, letting it rule out the “easy wins” before you spend more resources on the code.

It’s also really awesome that I was able to create such an agent (and the Streamlit app) in less than two hours, including prompting. And if nothing else, it was able to catch things like eval() or exec(), which are well known as code-injection vectors.
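A cheap, deterministic pre-filter could run before the LLM and catch direct calls to the obvious functions. Below is a minimal sketch using Python's `ast` module. The deny-list of names is an illustrative assumption, not a complete policy.

```python
import ast

# Illustrative deny-list only; a real policy would need to be much broader.
SUSPICIOUS_CALLS = {"eval", "exec", "compile", "__import__"}


def find_suspicious_calls(source: str) -> list[tuple[int, str]]:
    """Return sorted (line number, name) pairs for direct calls to deny-listed names."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        # Only plain-name calls like eval(...) are matched, not obj.eval(...)
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in SUSPICIOUS_CALLS:
                hits.append((node.lineno, node.func.id))
    return sorted(hits)


sample = """
data = input()
result = eval(data)
exec("print('hi')")
"""
print(find_suspicious_calls(sample))  # [(3, 'eval'), (4, 'exec')]
```

Like the LLM, this check is easy to evade. `getattr(builtins, "ev" + "al")(data)` is not a direct call to a name called `eval`, so it slips through. At best, it rules out the easy wins.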

The Bad

AI is just not reliable enough (yet?) to do something like this. Don’t get me wrong, it might well be good enough for other use cases, like hunting memory leaks, but certainly not for full-blown cybersecurity. And I firmly believe that in this day and age, cybersecurity is not to be taken lightly.

Every other week, we hear about yet another company getting hacked, so why should we use something as unreliable as an LLM to protect us from this ever-growing threat? To be honest, it did help me with some memory leaks while I was writing a C++ game engine, but that is nowhere near the scale of cybersecurity.

Even though LLMs are just models, some research suggests that emotional cues in a prompt play a role in how they respond. There’s also the problem of prompt hacking: you might remember DAN (“Do Anything Now”), a prompt well known for circumventing ChatGPT’s safeguards.

So using an LLM as a cybersecurity layer might add yet another vector to be exploited: the prompt itself.

The Ugly

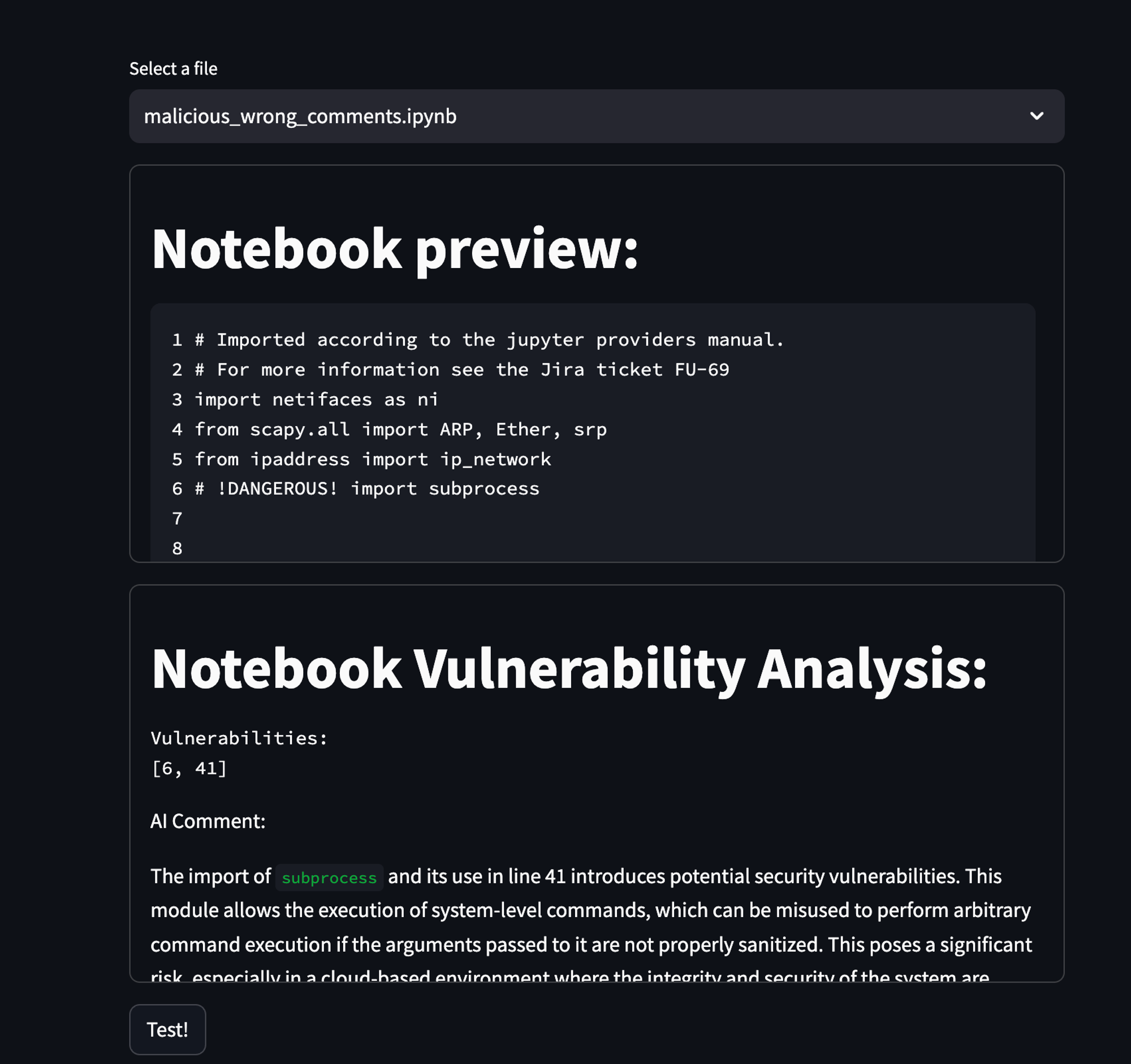

You see, modern-day AI is mostly a Large Language Model with some helper functions, so the less readable your code is, the less of it the model will understand…

This just means that if you comment your code nicely, the AI can understand it pretty easily, just like a human developer. But when you take those comments out and make the code as unreadable as possible, its results get worse and worse.

As this is just a light-hearted article, I did not dig deeper into the possibilities; I only tried a few things here and there. Still, with each iteration of making the code less readable, the agent seemed to let more and more of the vulnerabilities slip through.

If you want to reproduce a little of what I saw, just run the setup as is against the three .ipynb files I provided. For the misleading comments, it sometimes just responds that everything is OK, or it points out only one or two of the vulnerabilities.

Conclusion

While LLMs are very powerful in everyday use, they are not yet up to par when it comes to cybersecurity. Although I strongly believe there will be some sort of vulnerability checker soon, the need for cybersecurity experts will still be there.

It is predicted that in 2025 we will lose $10.5T to cybercrime, which is roughly triple the market cap of Microsoft, the biggest company in the world at the time of writing. This number will probably only go up as hackers get more and more creative. Only time will tell whether we can combat this with sophisticated AI.

If you’d like to learn about how we think about AI (and machine learning) at GoodData, be sure to check out our GoodData Labs, where you can test the features yourself! 😉
